TL;DR: Major AI companies are racing to build the world’s biggest data centers in a Hail Mary attempt at AGI. We don’t know whether that will be enough to build AGI. But what seems certain, if you look two moves ahead, is that we’re already in the first stages of an AI-driven cold war with China. That story is going to be the backdrop of the next decade. This post is based on a talk I gave at the Berghs School of Communication Unconference on AI.

There has been an explosion of news about this over the last week. AI expert Gary Marcus claims this is the end for growing AGI through LLMs. Just yesterday, Reuters broke this story about how the big AI labs are having to change research track. Online communities are buzzing with back and forth debate on this. As usual online, there’s a lot of hype and noise out there.

But it’s really important for us to understand this story, as communicators. It’s not just an academic question.

I’ve written before about how the debate about AI is going to affect the operating environment for all of us communicators, just as sustainability has. What happens in AI is going to affect every aspect of the information environment we work in.

So even if you don’t work in AI policy, this is important to know.

In this post, we will:

- Define what AGI is, and what an AI winter would be

- Look at the promises and hype around AGI

- Lay out what would be necessary to make AGI happen

- Examine the challenges we know stand in the way of AGI

- See how this leads almost inevitably to great power competition between China and the USA

Are we on the verge of AGI?

Building Artificial General Intelligence, AGI, is the explicit goal of the major AI companies. This means general-purpose AI with human-like adaptability and reasoning; a machine intelligence advanced enough that it can replace any remote worker on a 1:1 basis.

If we succeeded in building AIs like this, they could be run in thousands or millions of instances, round the clock, creating what Dario Amodei has called “A country of geniuses in a datacenter”.

He and other AI leaders are bullish about what such a concentration of geniuses would enable. In fact, the future is so bright it’s blinding. Huge economic growth, medical breakthroughs up to and including curing death, merging with the AI, living forever in simulated bliss and colonizing the reachable universe. The future AGI would bring about is so wild that the human mind recoils. This is a bug in human reasoning – we’re famously bad at appreciating exponential growth.

It’s easier to dismiss as hype.

And it seems many people are reacting to the wild visions of the AGI-powered future as hype.

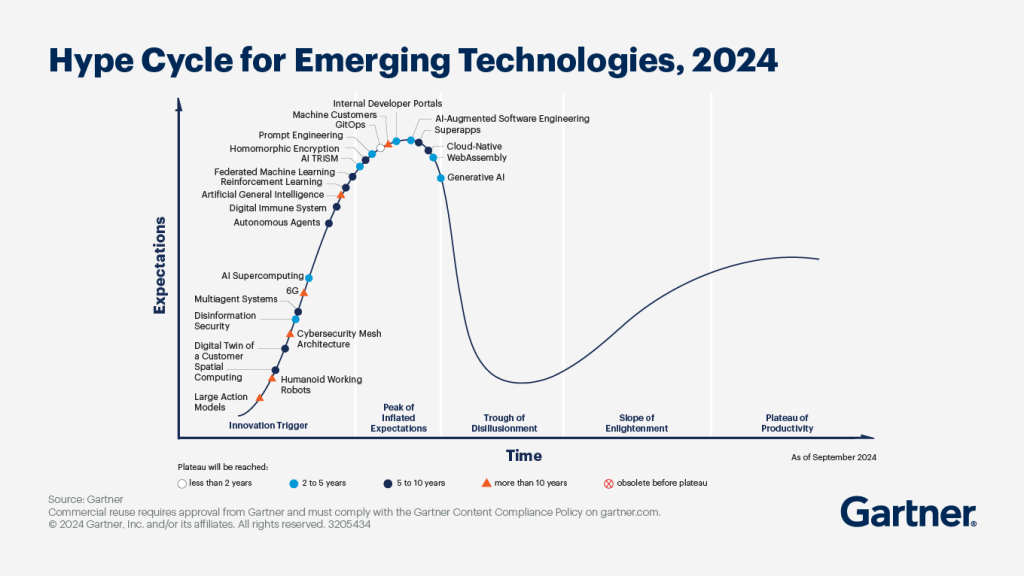

The Gartner Hype Cycle for 2024 has GenAI sliding down the trough of disillusionment and AGI not far off the peak.


. . . So it’s time for an AI Winter?

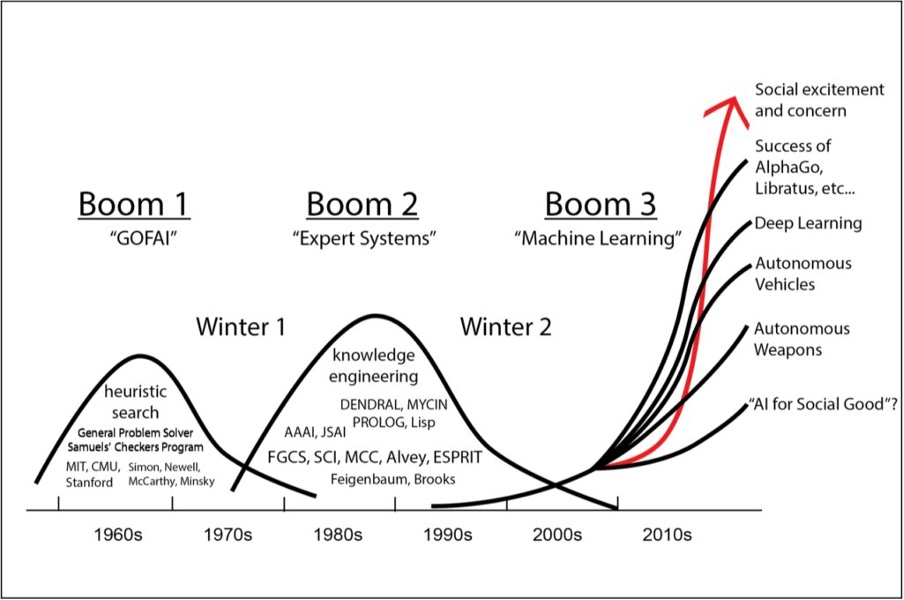

Each previous AI winter followed a period of hype, often driven by technological limits and unrealistic promises.

The history of AI development includes a few of these periods of stagnation, marked by waning enthusiasm and investment due to unmet expectations.


But is it different this time? What’s the case for AGI soon?

In a nutshell: scaling laws.

“To a shocking degree of precision, the more compute and data available, the better [AI] gets at helping people solve hard problems.” Sam Altman

Scaling Laws FTW

What we’ve discovered, and what Altman was talking about in that quote above, is that the more compute you dedicate to training AI, and the more data you train it on, the better it gets.

With incredibly consistent results.

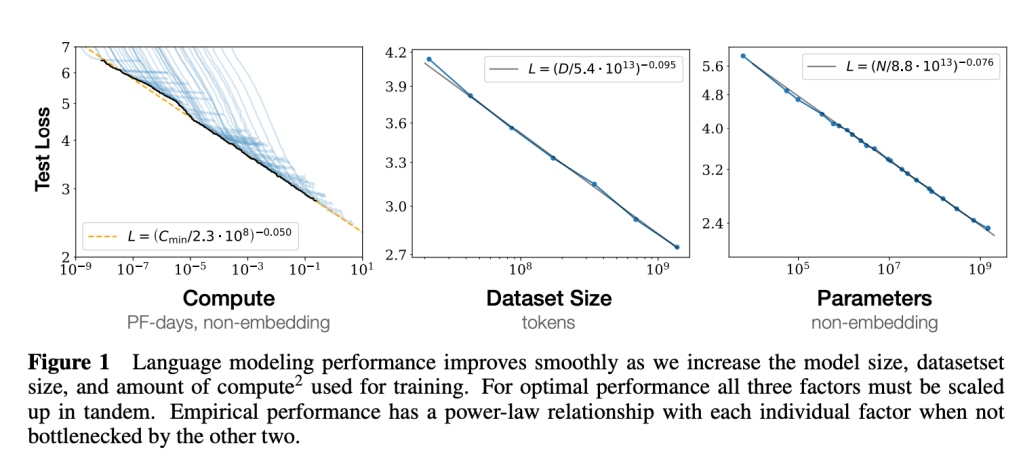

See this paper for a technical discussion of this.


These graphs show how test loss decreases smoothly and predictably as you scale up the inputs to AI training. Note, though, that the x-axis on those graphs isn’t linear. It’s logarithmic. Each step to the right involves a 10x increase in compute, dataset, or parameters.

So the scaling laws appear to hold, but you have to massively scale up your inputs to make them do so.

The real question is – how far do they hold? And will they hold long enough – is this all we need to create AGI?

Previously, Dario Amodei, Sam Altman and other AI leaders have appeared quite optimistic on this in public.

But they would.

Their investments depend on this enthusiasm. Nobody is going to give you a hundred billion dollars if you aren’t 100% committed to your crazy idea.

That is a Dr. Evil amount of money.

The challenges on the road to AGI

Scaling laws have held so far. And if they continue to hold – and that’s a big ‘if’ – then all we need to do is train AI on more and more compute, and it’ll get smarter and smarter.

Well – ‘all we need to do’ is glossing over a lot. Even if it’s just a question of training scale, there are some challenges ahead.

Let’s take a look at them.

Chips are hard to make . . .

Making the chips necessary for advanced AI training is technically very difficult.

They are among the more sophisticated objects that humans have ever built, and only a handful of global players are actually capable of developing them, creating a bottleneck that affects the entire industry. (This concentration makes chips a key point of leverage in AI governance. Unlike data or software, chips are tangible and restricted by physical limits in manufacturing and distribution.)

The upshot of this concentration is that if you’re trying to 10x the compute in your next AI training run, you can’t just go down to Walmart and stock up on the chips you need.

Outside the industry, it’s easy to miss just how hard it is, and how expensive it is, to get enough chips for scaling AI models. These frontier devices are so advanced that physically delivering the first of a new generation of chips is considered worthy of a multi-CEO photo op.

So if you’re an AI developer, and you’re trying to get enough chips to increase your training runs 10x or 100x, this isn’t a trivial expansion—it’s a multibillion-dollar infrastructure investment that only a few global players can afford.

. . . Data centers are power-hungry . . .

Chips go in data centers, and as demand for data centers is exploding, their power requirements are soaring. This is the second bottleneck to AGI development.

Currently, U.S. data centers consume about 4% of national energy production, but Goldman Sachs forecasts that this figure could double to 8% by 2030.

Some experts, like Leopold Aschenbrenner, predict it could reach as high as 20%, requiring an additional 100 GW of energy capacity, at a cost of over a trillion dollars (larger than the budget for the US military).

If you think this is outrageous – well, it is. But it’s already happening.

In Ireland, for example, data centers consumed 18% of the nation’s electricity in 2022, matching the energy use of all urban housing combined.

That’s right, data centers in Ireland consume as much energy as all the houses in Irish cities. Not in the future – today.

This strain has led EirGrid, Ireland’s state-owned grid operator, to impose a moratorium on new data centers in the Greater Dublin Area until at least 2028.

. . . and all this is going to cost a lot of money.

Accommodating these demands will require vast levels of investment. OpenAI and Microsoft are already planning the Stargate data center, at an eye-watering cost of $100 billion.

This is a taste of things to come.

The level of investment we’re looking at here means the upcoming infrastructure push for data centers and compute could surpass even iconic projects like the Apollo Program, the Manhattan Project, or Britain’s railway expansion in the 19th century.

For historical context, Britain’s railway expansion from 1841 to 1850 consumed roughly 40% of British GDP, a scale that would equal around $11 trillion for the U.S. over a decade.

The Apollo Program and Manhattan Project—two of the most ambitious American undertakings—required funding equivalent to around 3% and 2% of GDP, respectively.
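The arithmetic behind these comparisons can be sketched in a few lines. This is a rough back-of-envelope check that uses only the figures quoted above; the implied US GDP is backed out from the post’s own numbers (an assumption, not an official statistic), and the function names are hypothetical.

```python
# Figures quoted in the post.
RAILWAY_SHARE_OF_GDP = 0.40        # Britain's railway expansion, 1841 to 1850
RAILWAY_US_EQUIVALENT_TN = 11.0    # the post's US equivalent, in trillions of USD
APOLLO_SHARE_OF_GDP = 0.03
MANHATTAN_SHARE_OF_GDP = 0.02


def implied_us_gdp_tn() -> float:
    """US GDP (trillions of USD) implied by '40% of GDP is about $11 trillion'."""
    return RAILWAY_US_EQUIVALENT_TN / RAILWAY_SHARE_OF_GDP


def us_equivalent_tn(share_of_gdp: float) -> float:
    """Translate a share of GDP into trillions of USD at the implied US GDP."""
    return share_of_gdp * implied_us_gdp_tn()


if __name__ == "__main__":
    print(f"Implied US GDP: ${implied_us_gdp_tn():.1f} trillion")
    print(f"Apollo-scale effort: ${us_equivalent_tn(APOLLO_SHARE_OF_GDP):.3f} trillion")
    print(f"Manhattan-scale effort: ${us_equivalent_tn(MANHATTAN_SHARE_OF_GDP):.3f} trillion")
```

On these numbers, the railway comparison implies a US GDP of roughly $27.5 trillion, and an Apollo-scale or Manhattan-scale effort would land in the range of several hundred billion dollars today.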

It is highly relevant that both the Manhattan Project and the Apollo Program were propelled by the USA racing against an external rival: the Nazis and the Soviets, respectively.

It seems like this pattern is about to repeat itself, between the USA and China.

But there’s one more question we need to answer before we talk about the coming Cold War.

What if we need new science, too?

Can we create AGI if we ‘just’ scale up our existing systems? Large Language Models, our current generation of AI, are built on several breakthroughs in computer science, such as

- Deep neural networks: machine learning

- Convolutional neural networks: computer vision

- Transformers: language modelling

Do we need another breakthrough on the level of these to get to AGI? Or maybe several?

The simple answer to this is – we don’t know.

Leading AI experts offer a mixed view:

[Demis Hassabis, founder of DeepMind] “I think there’s a lot of uncertainty over how many more breakthroughs are required to get to AGI, big, big breakthroughs — innovative breakthroughs — versus just scaling up existing solutions. And I think it very much depends on that in terms of timeframe. Obviously, if there are a lot of breakthroughs still required, those are a lot harder to do and take a lot longer. But right now, I would not be surprised if we approached something like AGI or AGI-like in the next decade.”

[Andrew Ng, Founder of Google Brain] “I see no reason why we won’t get there someday. But someday feels quite far, and I’m very confident that, if the only recipe is scaling up existing transformer networks, I don’t think that will get us there. We still need additional technical breakthroughs.”

[Yann LeCun, Head of AI at Meta] “It’s not as if we’re going to be able to scale them up and train them with more data, with bigger computers, and reach human intelligence. This is not going to happen. What’s going to happen is that we’re going to have to discover new technology, new architectures of those systems. Once we figure out how to build machines so they can understand the world — remember, plan and reason — then we’ll have a path towards human-level intelligence.”

Many other AI researchers and thought leaders (including Sam Altman) have gone on the record saying that new science will be needed. But there are already many promising avenues of research, and some of the smartest computer scientists in the world are working on this.

We don’t know when it will happen. But here’s what we do know.

Here is a breakdown of what is extremely likely:

Politically, with Trump’s win in the USA, the environment for leading-edge AI development in the USA is going to change. Shakeel Hashim at Transformer had an excellent breakdown of this. Trump has said he’ll rescind the Biden Executive Order on AI, which is currently the leading US document on AI policy. Elon Musk will likely be at Trump’s elbow as de facto AI advisor – Musk is pro-AI safety, but he’s also pro-business and definitely pro-USA, so it’s unlikely that this will result in a slowdown of AI development in the USA.

In business, this political shift makes it likely that we’re about to see something like the unprecedented investments in compute and energy that I outlined earlier.

Internationally, this means that we’re about to enter a new cold war.

Great power competition

Under Trump’s presidency the pace of US investment in AI technology is going to increase, not slow down. There’s no way that China is sitting this one out. For either superpower, letting the other develop AGI without competing is unthinkable.

Think of what a military technology advantage can do. In 1991, the American-led coalition in the first Gulf war obliterated the Iraqi military in 100 hours of ground operations, suffering 292 dead, compared to 20-50 thousand Iraqi dead. The coalition lost 31 tanks, the Iraqis 3000. That is what a technological advantage can give you in a shooting war.

This is to say nothing of the potential advantages that await those who can successfully deploy AI in cyberwarfare or online espionage. This type of mathematics tends to concentrate the mind.

China has mobilized substantial resources towards AI research, in defiance of the USA’s embargo on exporting advanced chips there. They’re also pushing hard to get to AGI first.

This has obvious echoes of the nuclear arms race from the first cold war. And, just as in that war, you can bet that both US and Chinese intelligence and diplomatic corps are focusing on this problem too. Technology can be stolen. Imports can be blocked.

And, in case intelligence and diplomacy fail, powerplants can be sabotaged. Mines producing rare metals can be occupied. Shipping lanes can be blockaded.

Data centers can be bombed.

This is one reason why TSMC, the world’s largest contract chip manufacturer, is building facilities in the continental USA.

The overwhelming advantage accruing to the country that first develops AGI creates the kind of existential threat that led to doctrines from the first cold war like mutually assured destruction.

We’ve been in this dynamic before.

Looks like we’re going to be in it again.

Effects on the media environment

Separately from the likelihood of a new cold war, we should be worried about how information technology has affected democracies and autocracies.

In the 1990s, we thought that the spreading internet would inevitably open up China and Russia.

Instead, we’ve seen how China has applied information technology to build a Great Firewall and create an online climate structured to the values of the Party.

Meanwhile, far from strengthening our own democracies, we’ve seen social media intensify political divisions and make stable government more difficult – aided, in fact, by the intelligence services of Russia and other authoritarian actors throwing their disinformation bombs into the factional information mix.

Why would we think that AI will reverse this trend?

Thirty years ago, Francis Fukuyama wrote about The End of History and the final triumph of liberal democracy. That hasn’t happened yet. In fact, since Fukuyama wrote, the adoption of information technology has weakened democracies and strengthened authoritarian regimes. With the prospect of AI-supported disinformation in the mix, this situation is only going to become more volatile.

Conclusion

We don’t know for sure what’s coming next when it comes to AI. We could see AGI as soon as next year, if the most optimistic predictions come true. Alternatively, it might be so hard to do that most of us won’t see it in our lifetimes. Most AI researchers seem to think it’s quite likely we’ll have AGI at some point in the next decade, maybe two.

Whatever these timelines turn out to be, we’re in for a wild ride. Progress won’t slow down, despite what some are asking for. No – rather the opposite. Change will never be this slow again.

For us as communicators, it means we need to stay on this issue and get fluent about it, because it’s going to form the backdrop of the global political and business environment we all operate in.